Normalizing image data before training


Intro

I had completely forgotten to normalize the images I’m feeding into MaLPi’s network, so I thought I’d be a bit more formal about it than usual.

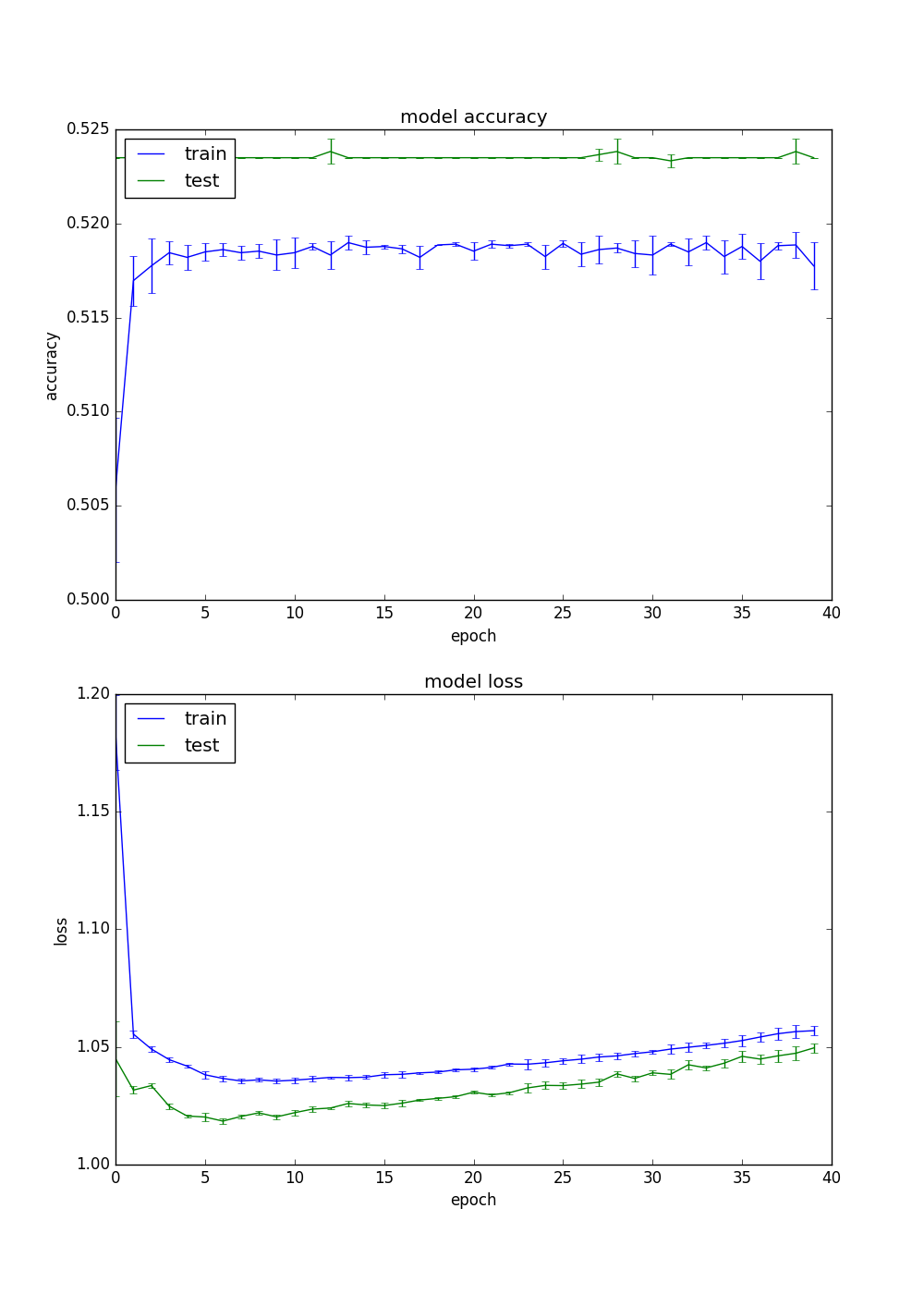

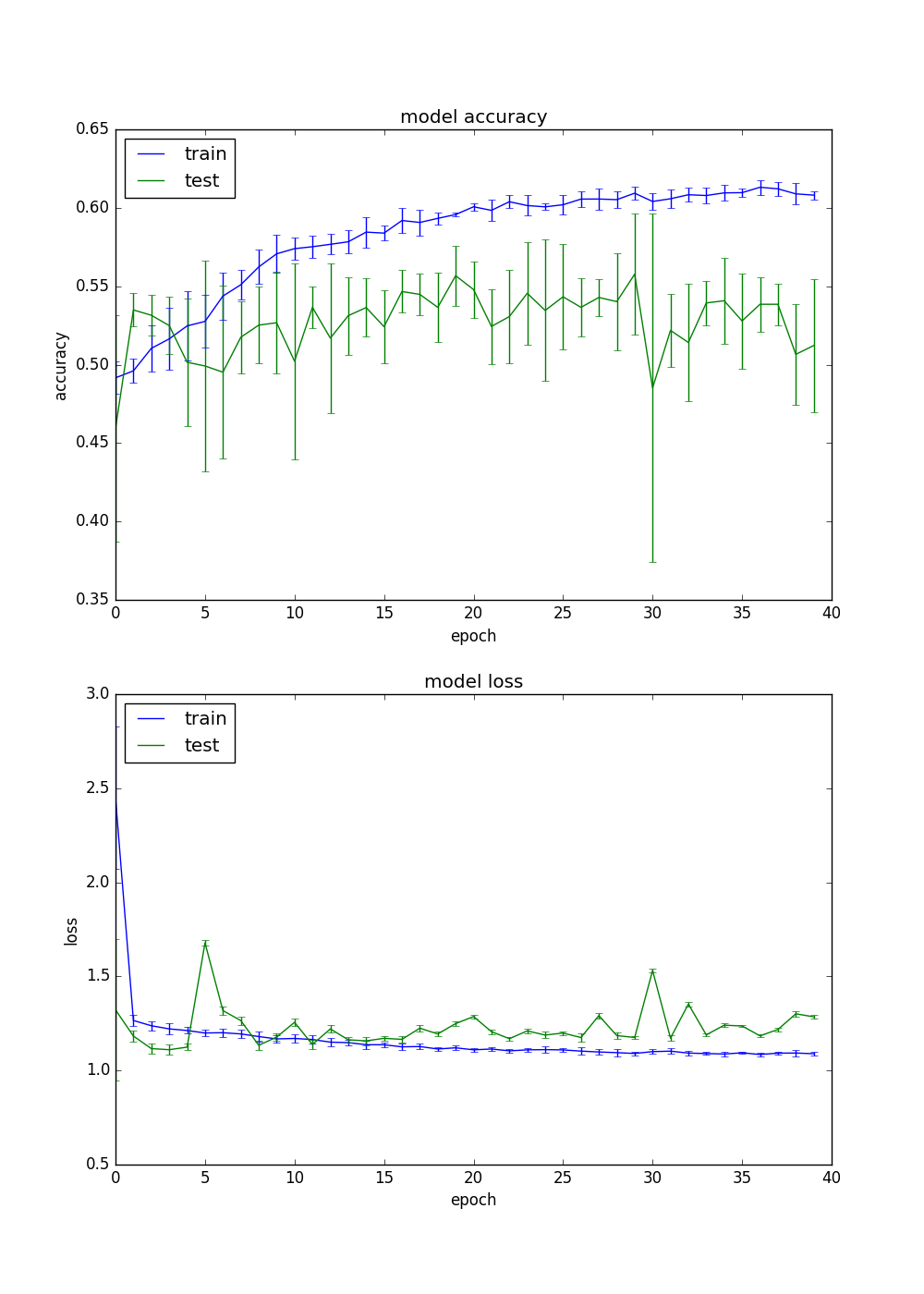

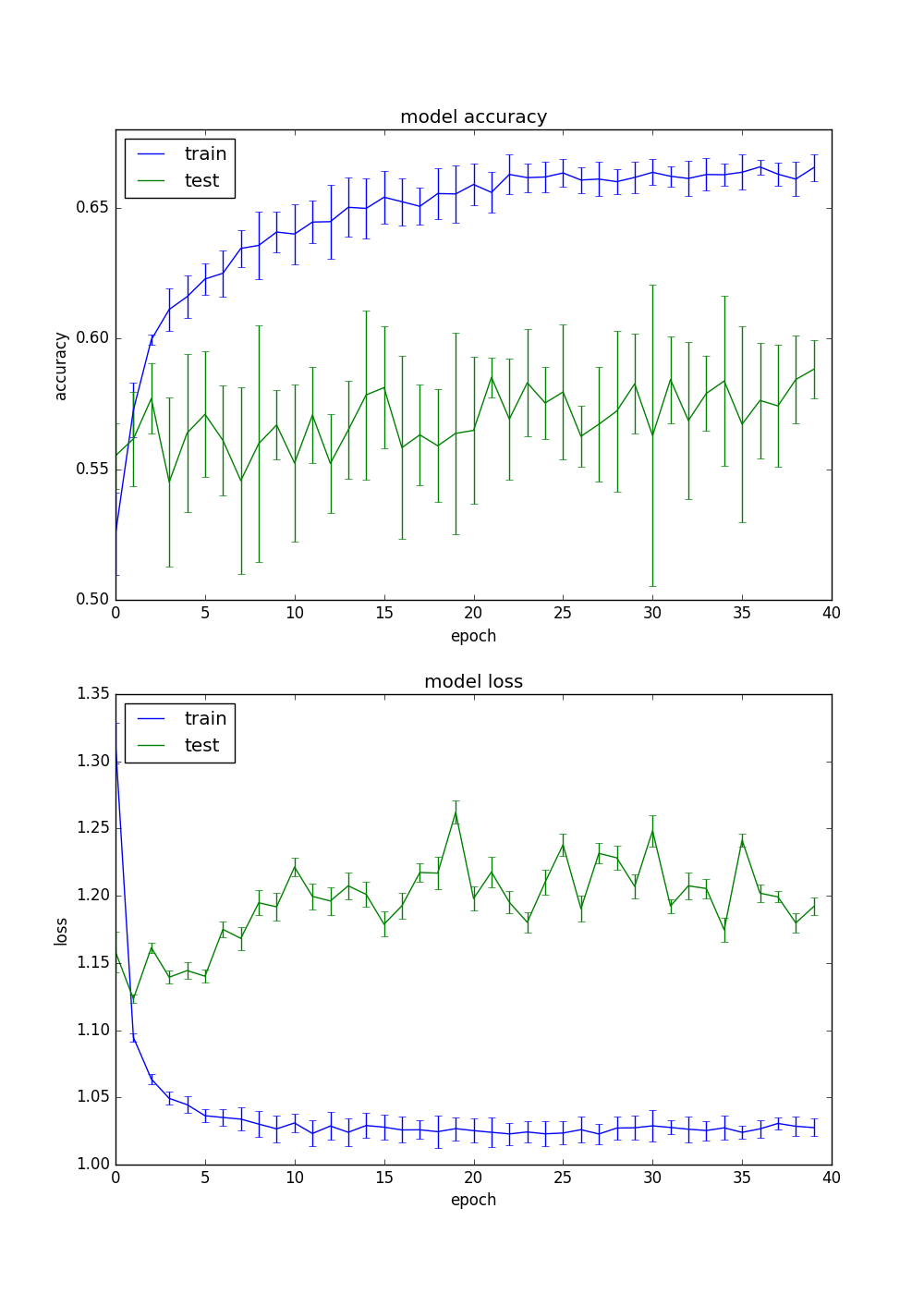

The plots show results from three different image preprocessing methods. Each method was trained five times, with a different weight initialization and a different batch randomization for each run. Lines are the mean of the five runs, with error bars showing one standard deviation. ‘test’ in the plots is actually the result on validation data held out using the validation_split argument to Keras’s fit method.
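Turning the five runs into plotted lines and error bars amounts to a mean and a standard deviation over the run axis, computed separately for each epoch. The following is a minimal NumPy sketch, assuming each run’s metric history has already been collected as a list of per-epoch values (for example from the History object that Keras’s fit returns). The function name is hypothetical, and the post doesn’t say which form of standard deviation was used, so the sketch makes that explicit.

```python
import numpy as np

def mean_and_std_curves(runs):
    """Aggregate one metric from several training runs, epoch by epoch.

    runs: a list of equal-length sequences, one per run (for example the
    per-epoch validation accuracy of each of the five runs).
    Returns (mean, std), each with one value per epoch.
    """
    curves = np.asarray(runs, dtype=np.float64)  # shape: (n_runs, n_epochs)
    # ddof=0 gives the population standard deviation; pass ddof=1 for the
    # sample standard deviation instead.
    return curves.mean(axis=0), curves.std(axis=0, ddof=0)

# Toy values for illustration only; these are not results from the experiments.
runs = [
    [0.50, 0.60],
    [0.70, 0.80],
]
mean, std = mean_and_std_curves(runs)
```

The two returned arrays map directly onto matplotlib’s `errorbar(x, y, yerr=...)`, with the epoch numbers as `x`.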

Methods and Results

First is my initial code with no normalization, just dividing by 255 to get values from 0.0 to 1.0.

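```python
import numpy as np

# After loading images from multiple directories into the list 'images'
images = np.array(images)
# np.float was removed in NumPy 1.24; np.float64 is the equivalent type.
images = images.astype(np.float64) / 255.0
```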

Second is subtracting the mean separately for each color band, so that each band is centered on zero.

```python
import numpy as np

# After loading images from multiple directories into the list 'images'
images = np.array(images)
images = images.astype(np.float64)

# Center each color band on zero (channels are the last axis).
images[:,:,:,0] -= np.mean(images[:,:,:,0])
images[:,:,:,1] -= np.mean(images[:,:,:,1])
images[:,:,:,2] -= np.mean(images[:,:,:,2])
```
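The three indexed lines above repeat the same operation once per channel. NumPy broadcasting can do this in one step for any number of channels: take the mean over the sample, row and column axes, then subtract the resulting per-channel vector. The following is a minimal sketch, assuming `images` is an (N, height, width, channels) array as in the indexing above. `center_per_channel` is a hypothetical name, and the random array is stand-in data.

```python
import numpy as np

def center_per_channel(images):
    """Return a zero-mean copy of images, centered separately per channel.

    images: array-like of shape (N, height, width, channels), channels last.
    """
    images = np.asarray(images, dtype=np.float64)
    # One mean per channel, taken over samples, rows and columns: shape (channels,).
    channel_means = images.mean(axis=(0, 1, 2))
    # Broadcasting subtracts channel_means[c] from every value in channel c.
    return images - channel_means

# Stand-in data: 10 random 8x8 RGB "images" with uint8 pixel values.
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(10, 8, 8, 3)).astype(np.uint8)
centered = center_per_channel(images)
```

Because the subtraction builds a new array, the input is left untouched. This differs from the in-place `-=` version, which modifies `images` directly.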

Third is the above plus dividing each color band by its standard deviation.

```python
import numpy as np

# After loading images from multiple directories into the list 'images'
images = np.array(images)
images = images.astype(np.float64)

# Center each color band on zero (channels are the last axis).
images[:,:,:,0] -= np.mean(images[:,:,:,0])
images[:,:,:,1] -= np.mean(images[:,:,:,1])
images[:,:,:,2] -= np.mean(images[:,:,:,2])

# Scale each color band to unit standard deviation.
images[:,:,:,0] /= np.std(images[:,:,:,0])
images[:,:,:,1] /= np.std(images[:,:,:,1])
images[:,:,:,2] /= np.std(images[:,:,:,2])
```
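As written, these snippets compute the mean and standard deviation over every loaded image, including the samples that validation_split later holds out. The trained network will also need the same statistics applied to new images at inference time. A common approach, also recommended in the CS231n notes, is to compute the per-channel statistics on the training portion only and then reuse them everywhere. Keras’s validation_split holds out the last fraction of the samples, taken before shuffling, so the training portion is the leading slice of the array. The following is a minimal sketch of that idea. The function names, the 0.2 split fraction and the `eps` value are assumptions, and the random array is stand-in data.

```python
import numpy as np

def fit_channel_stats(train_images, eps=1e-8):
    """Per-channel mean and standard deviation from training images only.

    train_images: array-like of shape (N, height, width, channels).
    eps (an assumed value) keeps a constant channel from causing division by zero.
    """
    train_images = np.asarray(train_images, dtype=np.float64)
    mean = train_images.mean(axis=(0, 1, 2))
    std = train_images.std(axis=(0, 1, 2)) + eps
    return mean, std

def apply_channel_stats(images, mean, std):
    """Standardize training, validation or inference images with saved stats."""
    return (np.asarray(images, dtype=np.float64) - mean) / std

# Stand-in data: 100 random 8x8 RGB "images".
rng = np.random.default_rng(0)
images = rng.integers(0, 256, size=(100, 8, 8, 3)).astype(np.uint8)

# validation_split holds out the *last* fraction of the samples, so fit the
# statistics on the leading portion only.
validation_fraction = 0.2  # hypothetical value
n_train = int(len(images) * (1.0 - validation_fraction))
mean, std = fit_channel_stats(images[:n_train])
images = apply_channel_stats(images, mean, std)

# The same mean and std can be stored (e.g. np.savez("channel_stats.npz",
# mean=mean, std=std)) and reused on new images at inference time.
```

With this split, the training portion ends up with zero mean and unit standard deviation per channel. The validation portion is close to that but not exactly, which mirrors what unseen data will look like.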

The code used to run these experiments is in this commit. I manually swapped in the lines listed above for each of the three experiments. In total there were approximately 7000 training samples.

Conclusions

While I would prefer to focus on validation accuracy as my main metric, I can’t because my model apparently isn’t generalizing at all. Considering these were all trained on only about seven thousand samples, I’m not surprised.

Go figure, but normalizing the inputs helps. I was a bit surprised that dividing by the standard deviation also helped. According to the CS231n notes on Data Preprocessing, it shouldn’t matter much for images, because their pixel values already share roughly the same scale (0 to 255).

Models

Future

- Collect more data.