First Real DAgger Results


Intro

As described in Initial DAgger Results, each DAgger iteration works like this:

- Collect data with the current neural network

- Have the expert correct the labels on the new data

- Retrain with all data collected so far

- Repeat
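The loop above can be sketched in Python as follows. This is a minimal sketch under stated assumptions, not the code used for these runs. `dagger`, `toy_train`, `toy_collect` and `toy_expert` are hypothetical names. The toy one-dimensional "car" stands in for the real network, car and expert: its observation is the offset from the centre line, and the expert steering is -0.5 * offset.

```python
from typing import Callable, List, Tuple

Policy = Callable[[float], float]  # maps an observation to a steering command
Sample = Tuple[float, float]       # (observation, expert steering label)


def dagger(
    initial_data: List[Sample],
    train: Callable[[List[Sample]], Policy],
    collect: Callable[[Policy], List[float]],
    expert: Policy,
    iterations: int,
) -> Tuple[Policy, List[Sample]]:
    """Run the DAgger loop; return the final policy and the aggregated dataset."""
    dataset = list(initial_data)
    policy = train(dataset)
    for _ in range(iterations):
        # Collect data with the current network driving.
        observations = collect(policy)
        # Have the expert supply the correct action for each observation.
        dataset.extend((obs, expert(obs)) for obs in observations)
        # Retrain with all data collected so far (aggregate, don't replace).
        policy = train(dataset)
    return policy, dataset


# --- Hypothetical toy stand-ins, for illustration only ---
def toy_expert(x: float) -> float:
    # Steer back toward the centre line in proportion to the offset.
    return -0.5 * x


def toy_train(data: List[Sample]) -> Policy:
    # Least-squares fit of steering = slope * offset (line through the origin).
    slope = sum(x * y for x, y in data) / sum(x * x for x, _ in data)
    return lambda x: slope * x


def toy_collect(policy: Policy, steps: int = 5) -> List[float]:
    # Simulate a short drive: the car drifts by 0.1 per step while the policy steers.
    x, seen = 1.0, []
    for _ in range(steps):
        seen.append(x)
        x = x + policy(x) + 0.1
    return seen


policy, dataset = dagger(
    [(1.0, -0.5), (-2.0, 1.0)], toy_train, toy_collect, toy_expert, iterations=3
)
print(len(dataset), round(policy(2.0), 6))
```

The important design point is in the last step: each retrain uses the whole aggregated dataset rather than only the newest batch.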

I ran three iterations of the above loop.

Methods and Results

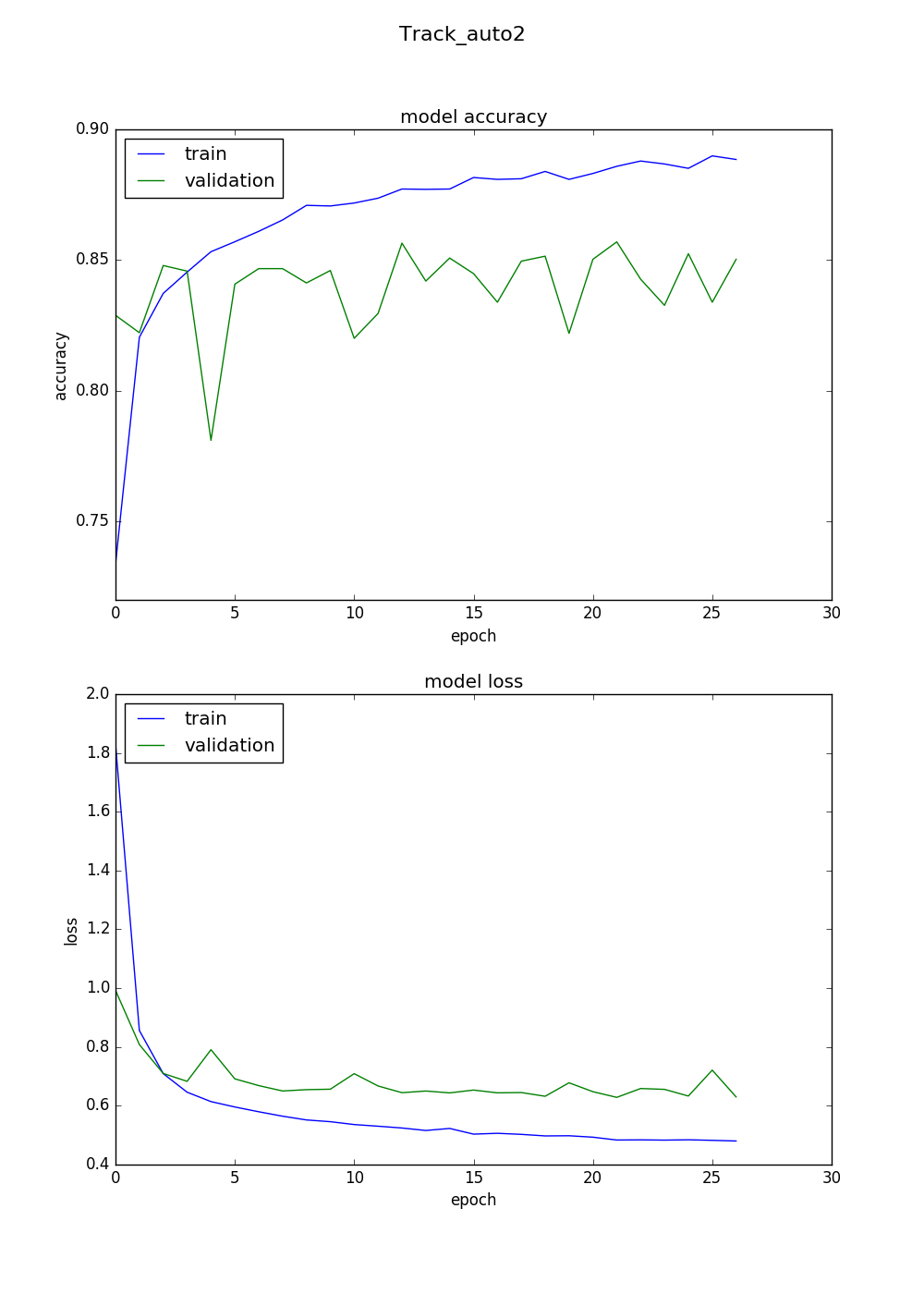

I was short on time when training Auto 2, so I trained it only once; there is no mean or standard deviation for that model.
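This sketch assumes that each model's mean and standard deviation are taken over repeated training runs, with the metric (for example, final validation accuracy) stored as a list of floats per model. It shows why a single run yields a mean but no standard deviation. `summarize_runs` and the model names are hypothetical, and the values are placeholders, not results from this post.

```python
import statistics
from typing import Dict, List, Optional, Tuple


def summarize_runs(
    runs: Dict[str, List[float]],
) -> Dict[str, Tuple[float, Optional[float]]]:
    """Return (mean, sample standard deviation) of a metric for each model.

    The standard deviation is None when a model was trained only once,
    because statistics.stdev needs at least two data points.
    """
    summary: Dict[str, Tuple[float, Optional[float]]] = {}
    for model, values in runs.items():
        mean = statistics.mean(values)
        std = statistics.stdev(values) if len(values) > 1 else None
        summary[model] = (mean, std)
    return summary


# Placeholder values, not results from this post.
summary = summarize_runs({"model_a": [1.0, 2.0, 3.0], "model_b": [4.0]})
print(summary)
```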

Conclusions

Judging by validation loss and accuracy, DAgger does not seem to improve things much. That isn't too surprising: the network is only ever trained to imitate the driver, and adding data collected while it was imitating the driver shouldn't change much how well it imitates. These metrics measure agreement with the driver's labels, not how well the car actually stays on the track.

What I need is an objective way to measure how well each model drives, such as the time it takes to complete a lap or the number of times it leaves the track per lap. Easier to measure, but less accurate, is the length of each drive: when the neural net is driving, I stop the recording as soon as the car goes off the track, so longer recordings mean the model drove better. However, other factors can end a drive early; for example, the Raspberry Pi sometimes reboots mid-drive.
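The drive-length metric could be computed roughly as follows. This is a minimal sketch that assumes each recording is logged with its duration and the reason it ended. The `Drive` record, its fields, and `mean_drive_length` are hypothetical, and the durations are placeholders. Drives that ended for reasons other than leaving the track are dropped rather than counted as short drives.

```python
from dataclasses import dataclass
from statistics import mean
from typing import List


@dataclass
class Drive:
    """One autonomous drive (hypothetical record format)."""
    duration_s: float  # length of the recording, in seconds
    end_reason: str    # "off_track", or another cause such as "reboot"


def mean_drive_length(drives: List[Drive]) -> float:
    """Mean recording length over drives that ended because the car left the track.

    Drives cut short for other reasons (e.g. a Raspberry Pi reboot) are
    excluded, since they only show that the model lasted at least that long.
    """
    valid = [d.duration_s for d in drives if d.end_reason == "off_track"]
    if not valid:
        raise ValueError("no drives ended by leaving the track")
    return mean(valid)


# Placeholder durations, not measurements from this post.
drives = [Drive(30.0, "off_track"), Drive(50.0, "off_track"), Drive(10.0, "reboot")]
print(mean_drive_length(drives))
```

Excluding interrupted drives throws away some information. A more careful analysis could treat them as censored observations, but simple exclusion at least keeps a reboot from making a model look worse than it is.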

Future

Find a better way than loss or training/validation accuracy to measure each neural net’s performance.