Initial DAgger Results

Published:

Intro

DAgger (Dataset Aggregation) is an algorithm that tries to get around some issues with imitation learning. In imitation learning, a neural network is trained to mimic an ‘expert’ who provides training data. In MaLPi’s case that means me manually driving the car around a racetrack, collecting images and actions as I do. The neural network will never be perfect, however, and each error it makes can push it further away from the distribution of states on which it was trained, quite possibly leading to more and more errors. The usual result is the car racing off the track toward a wall or other obstacle.

With DAgger, the idea is to use enough expert training to start to get reasonable behavior, then start collecting new data while the neural network drives itself. Errors made during these drives are corrected after the fact by the ‘expert’. That way the types of errors the neural net is likely to make will be in the training set from then on, and hopefully less likely to occur.

- Collect data with the current neural network

- Have the expert fix the new data

- Retrain with all data collected so far

- Repeat
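To make the loop above concrete, here is a minimal sketch in Python. It is not MaLPi’s code: the 1-D ‘track offset’ state, the rule-based `expert_action`, and the nearest-neighbour `train` function are all hypothetical stand-ins for camera images, my manual corrections, and the neural network. Only the structure of the loop (drive, relabel, aggregate, retrain) is the point.

```python
import random

# Effect of each action on the car's lateral offset (toy dynamics, an assumption).
STEER = {"left": -0.2, "straight": 0.0, "right": 0.2}


def expert_action(offset):
    """Hypothetical expert: steer back toward the track centre (offset 0)."""
    if offset > 0.1:
        return "left"
    if offset < -0.1:
        return "right"
    return "straight"


def train(dataset):
    """Toy stand-in for network training: a 1-nearest-neighbour policy."""
    def policy(offset):
        nearest = min(dataset, key=lambda pair: abs(pair[0] - offset))
        return nearest[1]
    return policy


def drive(policy, steps, rng):
    """Let a policy drive and record the states (offsets) it visits."""
    offset, visited = 0.0, []
    for _ in range(steps):
        visited.append(offset)
        offset += STEER[policy(offset)]
        offset += rng.uniform(-0.15, 0.15)  # bumps, slippage, etc.
    return visited


def dagger(iterations=4, steps=50, seed=0):
    rng = random.Random(seed)
    # Bootstrap: the expert drives and labels the first batch.
    dataset = [(s, expert_action(s)) for s in drive(expert_action, steps, rng)]
    policy = train(dataset)
    for _ in range(iterations):
        # 1. Collect data with the current policy at the wheel.
        states = drive(policy, steps, rng)
        # 2. Have the expert fix (relabel) the new data after the fact.
        dataset += [(s, expert_action(s)) for s in states]
        # 3. Retrain on all data collected so far.
        policy = train(dataset)
    return policy, dataset


policy, dataset = dagger()
print(len(dataset), "labelled samples after aggregation")
```

The key difference from plain imitation learning is in step 1: the states come from the learner’s own driving, so the aggregated dataset covers the mistakes the learner actually makes.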

Methods and Results

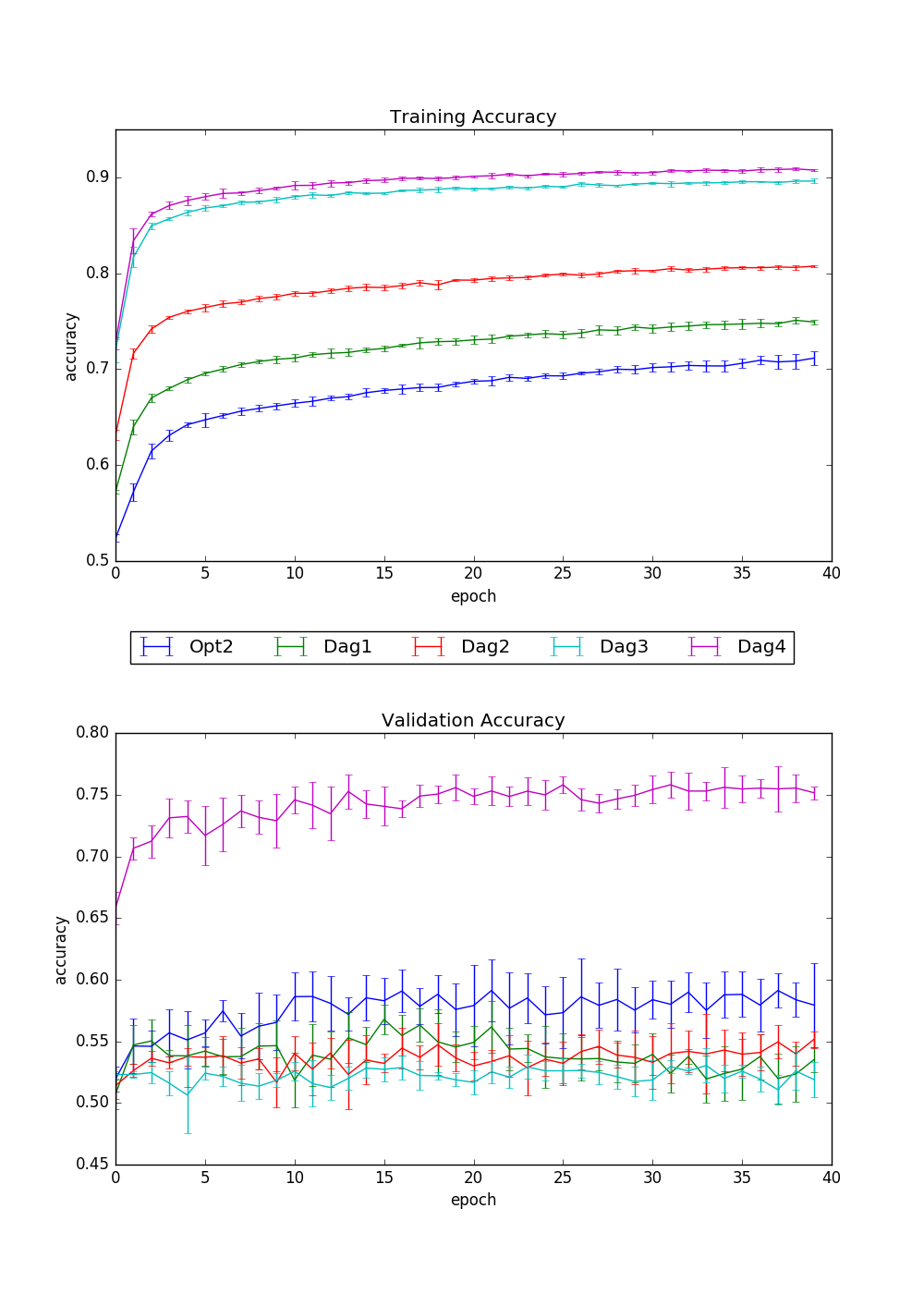

My baseline for this was ‘opt 2’ from my Hyperparameter Optimization post. Training accuracy was about 70% and validation was between 55% and 60%. ‘Dag1’ through ‘Dag4’ are the results of me going through all of the racetrack data I’d collected so far and cleaning it up. I deleted some drives that just had bad data, and I manually changed actions whenever there was a stop command or when MaLPi went off course. Both of those types of errors are common, partly because my web-based controls are not easy to use, partly because I’m just a bad driver, and partly because of a bug that causes MaLPi to not respond to commands for up to several seconds at a time.

Each ‘Dag’ case corresponds to me fixing another ~25% of the data collected so far.

Conclusions

Training accuracy went up nicely with each quarter of the data that I fixed. Validation accuracy did not go up, and possibly even got worse, until I had finished going through the entire dataset. At that point, though, there was a very large improvement, with validation accuracy as high as 75%.

Future

Technically these results don’t show anything about the DAgger algorithm itself, since none of the data samples used here were collected while the neural net was driving. That will be next.